Self Hosting Project (DevOps Infra from Scratch)

About this Project

A Background Story

So before I eventually sold my soul to Kubernetes, I decided that a reasonably comprehensive IaaS project was in order. I wanted to make good use of solid, basic Networking, Security and Scripting, then Terraform, Ansible, Docker, Traefik and WireGuard (and all the concepts they stand for), in one complete project. Since Kubernetes has been the primary tool on my ’to-learn’ list for a bit, I thought, well, why not deploy all the necessary tools I’d need for my shift into using it in a precursor project? That felt like a great idea!

So the little twist was that I’d had that idea for a while, until I had reason to quickly get Infrastructure up and running that’d allow me to host my own Vaultwarden password manager: my Bitwarden subscription at the time was about to expire. Bitwarden online is inexpensive, but I didn’t have the $10 to renew it at the time. What I did have was some cloud credits on DigitalOcean, a domain name, free Cloudflare, the idea for this project, and reasonable IT infra skills.

So for this project, there were 5 all-important goals (and a 6th):

- COMPLETE AUTOMATION (End-to-End set it off and go sleep type of stuff)

- COMPLETE VISIBILITY (Nothing moves an Inch in the environment without being spotted, and penned down)

- COMPLETE REPRODUCIBILITY (All important data backed up fully, accompanied by a set-it-off-and-go, date-based restore policy)

- GOOD SECURITY (Accessible only via a private VPN and SSH keys. Complete firewall lock-down, secure Docker-to-host communication)

- Do it in the CHEAPEST, SELF-HOSTED, OPEN-SOURCE way possible (a single VPS fit my bill)

- Do it from SCRATCH

In this project, I’ve deployed the 5 most common services one would typically need to drive a k8s environment (and a few additional, personal things, including an LLM frontend I call Jarvis 😉).

- A Private Git hosting service (Gitea)

- A CI/CD tool (Jenkins)

- A Secrets Management tool (HashiCorp Vault)

- A Private Artifactory (JFrog Container Registry)

- A Vulnerability scanning tool (SonarQube)

With these, you could host private code on Gitea, build with Jenkins, scan (code and image scans) with SonarQube and Trivy, release to the self-hosted Artifactory, and deploy to Kubernetes or any other environment. All of this, while securing your secrets both within your Pipelines and Kubernetes environment(s) through a central Vault instance.

To achieve the second goal (complete visibility), I deployed a Monitoring and Observability stack that provides complete insight into both the Host Server and all Container environments.

- Prometheus and cAdvisor for Metrics collection

- Loki and Promtail for Log aggregation

- Grafana for both Metrics and Logs Visualization

Concerning Security: since this Infrastructure is meant to be the backbone of DevOps operations for an organization, and hence internal, its environment is completely locked off from the public Internet and accessible only via a VPN. The project also uses a custom SSH port, which keeps most automated, random Internet attacks away from the environment. Additionally, secure Docker-to-host communication is implemented via suitable iptables rules, ensuring proper isolation is maintained between the Container and Host environments.

Where’s the source code?

I’m very available (highly eager) to help your Organization put this Infrastructure, or a close equivalent, into service. I’ll port this to any environment (cloud or on-premise), with the guarantee that none of the core features listed above will be missing or incomplete. Bring them gigs 🆒

What’s next for me?

Now with the power vested in me (by someone), I officially declare myself a Kubestronaut. From now on I go on living and breathing Kubernetes, lol. So next I’ll go ahead with some juicy k8s projects I’m cooking up with a few friends. We’ll be porting a fairly complicated microservices application to k8s from scratch (via DevSecOps): creating k8s clusters, fitting them up with ArgoCD and going crazy with GitOps, come November.

If you loved this project, don’t forget to drop a Like and Follow for more ‘content’ like this. Also let me know in the comments what you think about the project and how you believe I could have made it better.

If you’d like to repeat this project

- Be warned, I have not written instructions on how to do anything.

- All you’ll find are instructions on what to do. I wrote down these steps as I worked on this project with friends. Ideally these instructions should help you get this done in a sane way.

- All the fun’s in figuring out the how for any part of the project you’re unfamiliar with.

Project Prerequisites

- A domain name

- Access to Cloud hosting

- A Cloud key store

- One (or more) Cloud storage bucket(s)

- Some IT know-how

Important notes on the Project’s structure and organization

The Controlling and Target Cloud Accounts

This project assumes the existence of two different Cloud platform accounts: one for the target resources, and the other for ultimate admin access over the resources deployed on the target account.

The most important parts of this project are:

- Terraform State (for obvious reasons)

- Server SSH Keys, which Ansible (or a person) would need to connect to the Server

- The Backup Archives

- The HashiCorp Vault Auto-Unseal keys

With these four things, and the code implementation, the entire system could be reproduced entirely, from scratch.

As a result, during this project I assumed that there’d be an Individual (or a set of Individuals) with Super Admin Access over the entire company’s base (operational) Infrastructure. These would (ideally) be key stakeholders such as the CEO or the Head of Engineering. They’d be the persons with the ultimate (proverbial) keys to the kingdom.

In practice, the “controlling” and “resource” accounts can take various forms and configurations, depending on your specific setup and requirements. These accounts could represent different entities across multiple aspects of your cloud infrastructure. Here are a few possible scenarios:

1. Multi-cloud: The accounts could belong to different cloud platforms altogether. For example, you might have your controlling account hosted on Azure, while your resources are deployed on a separate cloud provider like DigitalOcean. This allows you to leverage the unique features and services offered by each platform.

2. Same cloud platform, different accounts: The accounts could be separate accounts within the same cloud provider. This setup is common when you want to maintain isolation between different environments, such as production and development, or between different departments or projects within your organization.

3. Same cloud platform, different organizational units: In some cloud platforms, like Azure with its resource groups or Google Cloud with its projects, the accounts could represent different logical groupings within the same cloud account. This allows you to organize and manage resources based on specific criteria, such as project, environment, or application.

I took the Multi-cloud approach: my resources are deployed at DigitalOcean, while my Controlling Account is on Azure.

On Deploying Other (Extra) Services

As you’ve likely noticed, in addition to the core tools, I deployed the Vaultwarden Password Manager and AnythingLLM applications. These tools were deployed for my own use. You’re free to get rid of them and deploy any other tool of your choosing.

General Overview

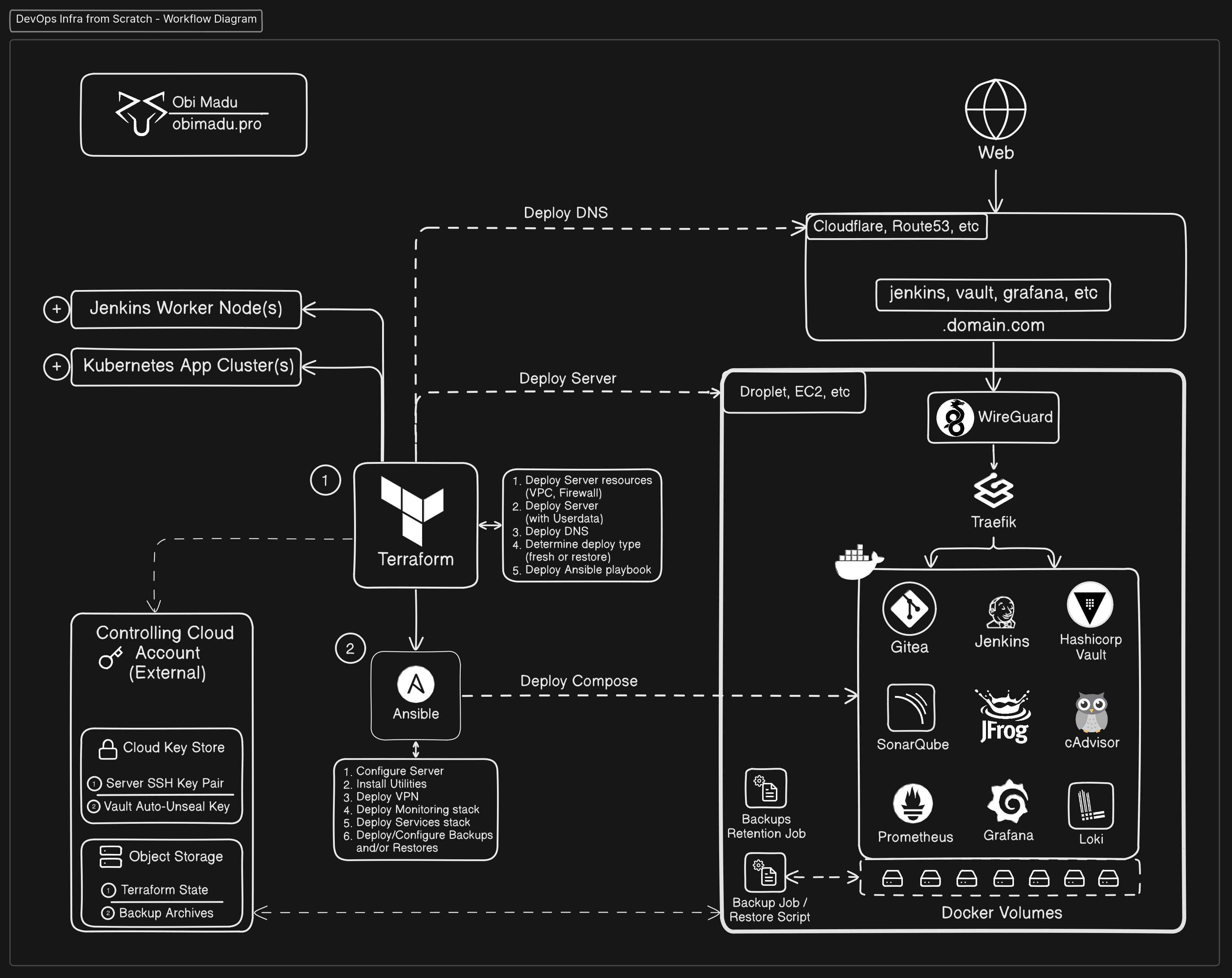

In the spirit of a general overview, below are the functions of each of the three core components of this project;

Terraform to deploy;

- Server resources (VPC, Firewall/SG)

- Server at DO/AWS/etc, with Userdata script

- DNS records at Cloudflare/etc

- Ansible playbook

Ansible playbook to;

- Configure server (Hostname, Networking/Security rules)

- Install Utilities (s3cmd, prometheus-node-exporter)

- Install & Configure Docker

- Install & Configure WireGuard VPN

- Deploy Docker compose (Services and Monitoring stacks)

- Scripts and jobs for Docker volume backups

- Scripts for Docker volume restore

Docker Compose to Deploy;

- Traefik gateway (HTTP, SSL, HTTP-HTTPS Redirects, IP-Whitelist)

- Gitea

- Jenkins

- HashiCorp Vault

- JFrog Container Registry

- SonarQube OSS

- Prometheus

- Loki & Promtail

- Grafana

- Vaultwarden

- AnythingLLM

Task Groupings

These task groupings were key contributors to the fact that I finished this project. I drew them up each day that I worked on the project with my friends. They’re a big reason I didn’t give up. In most (if not all) cases they represent daily goals. Do them in order, and you’ll have yourself fully functional Infrastructure.

Task 1

Goal: Bootstrap Infrastructure via Terraform

- Single server at DO/AWS/etc via local state

- Firewall/Security Group

- DNS records at Cloudflare/Route53, etc for future services (jenkins, etc)

Task 2

Goal: Set up remote state for Infra. Make sure Ansible can access, and deploy to, the server resource.

- Configure remote state for Terraform

- Configure Terraform to deploy a dummy Ansible playbook:

  2.1 Create a dummy Ansible playbook.

  2.2 Create an SSH key-pair for server access.

  2.3 Configure an Ansible user account on the server via a cloud-init userdata script. The userdata script creates an ‘ansible’ user account and adds the public key generated in step 2.2 as an authorized key for it.

  2.4 Use our private key to run the dummy playbook on the server via a Terraform local-exec provisioner.

Task 3

Goal: Learn to deploy Docker Compose via Ansible. Learn Traefik basics.

- Create a docker-compose file that deploys a temp Jenkins instance to the URL jenkins.your-domain.com using a Traefik reverse-proxy container. Deploy only to http. No need for any persistence via Docker volumes yet.

- Deploy the docker-compose file via an Ansible role. Deploying via a role is compulsory. Remember to get rid of the previous dummy deployment now, it isn’t needed anymore.

Task 4

Goal: Implement basic system security.

- Configure our server to use a different SSH port.

- Disable SSH Password login for all Users

- Disable SSH Root login

- Modify our firewall rules to close off port 22 and open up the new SSH port.

- Configure Ansible to connect via the new ssh port.

- Add https to our Docker compose deployment.

Task 5

Goal: Configure host server monitoring. Upgrade our containers workload environment.

- Install a Prometheus node exporter on the host server via Ansible.

- Install Prometheus and Grafana to our container workloads.

- Create a new Docker network with the bridge driver, and assign any private /24 address range as its subnet. Assign all container workloads to this network. (If you like, create different compose files for the monitoring stack and the services stack. This is in fact advised, as all these services in a single compose file will look a bit messy by the end.)

- Create appropriate Docker volumes for each compose service, and assign these volumes to the services.

- Add our host machine as a metrics target in the Prometheus service, via Ansible.

- Configure Grafana to get our Prometheus Metrics.

- Add a Grafana dashboard to monitor our host server. This, for now, is just to ensure the setup works. It will be temporary.

Task 6

Goal: Implement backups.

- Write a script to back up all Docker volumes and upload them to a remote bucket:

  1.1 The script should mount each volume into a temporary container.

  1.2 Tar and Gzip its content, keeping all file and dir ownership info intact.

  1.3 Save the archive in a ‘backups’ dir. Use a ‘volume-name-timestamp.tar.gz’ file naming convention.

  1.4 When all volumes are archived, upload everything in the ‘backups’ folder to the cloud storage bucket you’ve designated for backups.

- Via Ansible, create a cron-job that runs this backup script twice daily, at any two times of your choosing.
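If you want a nudge on the shape of that backup script, here’s a minimal Python sketch of steps 1.1 to 1.4. It is not my actual script. The bucket name, the ‘backups’ staging path, the timestamp format and the choice of the alpine image for the temporary container are all assumptions made for illustration. It relies on tar recording file ownership and permissions in the archive by default, and on s3cmd already being configured on the host.

```python
import subprocess
from datetime import datetime, timezone
from pathlib import Path

BACKUP_DIR = Path("backups")            # hypothetical local staging dir
BUCKET_URL = "s3://my-backups-bucket/"  # hypothetical bucket name


def archive_name(volume, now):
    """Build a 'volume-name-timestamp.tar.gz' name (expects a UTC datetime)."""
    return f"{volume}-{now.strftime('%Y%m%dT%H%M%SZ')}.tar.gz"


def tar_command(volume, archive, backup_dir):
    """Command that mounts the volume read-only into a throwaway container
    and writes a gzipped tar of its content into the backups dir."""
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/source:ro",
        "-v", f"{backup_dir.resolve()}:/backups",
        "alpine",
        "tar", "-czf", f"/backups/{archive}", "-C", "/source", ".",
    ]


def run_backup():
    """Archive every Docker volume, then upload the whole backups dir."""
    BACKUP_DIR.mkdir(exist_ok=True)
    now = datetime.now(timezone.utc)
    volumes = subprocess.run(
        ["docker", "volume", "ls", "-q"],
        check=True, capture_output=True, text=True,
    ).stdout.split()
    for volume in volumes:
        archive = archive_name(volume, now)
        print(f"backing up {volume} -> {archive}")
        subprocess.run(tar_command(volume, archive, BACKUP_DIR), check=True)
    subprocess.run(
        ["s3cmd", "put", "--recursive", f"{BACKUP_DIR}/", BUCKET_URL],
        check=True,
    )
```

Calling run_backup() from the cron-job is all that’s left. Keeping the name and command builders as small pure functions makes them easy to test without touching Docker.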

Task 7

Goal: Create a backups retention policy.

- Write a script to delete all backups older than a specified number of days. The number of days should be a parameter passed in to the script at call-time.

  1.1 For instance, if the script is called with a ‘5’ parameter, it should delete all backups not within the time-frame of the past 5 days. It should keep only 5 days’ worth of recent backups. Optionally, you could choose to always keep the most recent backups of each volume.

- Via Ansible, create a cron-job to run this ‘cleanup’ script once daily. Keep 7 days worth of recent backups.
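The retention logic is mostly date arithmetic, so here’s a rough Python sketch of just the “which archives have expired?” decision. It assumes archive names look like ‘volume-YYYYmmddTHHMMSSZ.tar.gz’ (my illustration of the naming convention, not a requirement), and the function names are hypothetical. The optional “always keep the newest backup of each volume” behaviour is the keep_latest flag.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumed naming convention: '<volume>-<YYYYmmddTHHMMSSZ>.tar.gz'
NAME_RE = re.compile(r"^(?P<volume>.+)-(?P<stamp>\d{8}T\d{6}Z)\.tar\.gz$")


def parse_backup(name):
    """Return (volume, timestamp) for a backup name, or None if it doesn't match."""
    match = NAME_RE.match(name)
    if match is None:
        return None
    stamp = datetime.strptime(match["stamp"], "%Y%m%dT%H%M%SZ")
    return match["volume"], stamp.replace(tzinfo=timezone.utc)


def expired_backups(names, keep_days, now, keep_latest=True):
    """List the archives older than keep_days, optionally sparing the
    newest archive of every volume. Unrecognised names are ignored."""
    cutoff = now - timedelta(days=keep_days)
    parsed = []
    for name in names:
        info = parse_backup(name)
        if info is not None:
            parsed.append((name, info[0], info[1]))

    newest = {}  # volume -> (name, timestamp) of its most recent archive
    for name, volume, stamp in parsed:
        if volume not in newest or stamp > newest[volume][1]:
            newest[volume] = (name, stamp)
    protected = {name for name, _ in newest.values()} if keep_latest else set()

    return sorted(
        name for name, _, stamp in parsed
        if stamp < cutoff and name not in protected
    )
```

Whatever this returns is what your cleanup script would then remove from the bucket (and from the local ‘backups’ dir), logging each deletion as it goes.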

Task 8

Goal: Setup a Logging Infrastructure.

- Modify your backup and cleanup scripts to log relevant actions to stdout.

- Modify your backup and cleanup cron-jobs to pipe all outputs and errors, with relevant tags, to the syslog.

- Deploy Promtail and Grafana Loki via Docker compose. Mount the ‘/var/log’ directory read-only into the Promtail container.

- Using Ansible, write a Promtail config file to scrape logs from the /var/log directory, and send them over to the Loki service.

- Install the Loki docker plugin into the host server using Ansible. Configure the Docker daemon to use Loki as the default logs driver by editing the /etc/docker/daemon.json file appropriately. Remember to restart the Docker service and re-create all the containers after this step.

- Add Loki as a data-source to Grafana. Confirm you can visualize logs from the /var/log dir and all the docker containers running on the host via the Grafana ‘Explore’ tab.

Task 9

Goal: Complete Services Deployment.

- Deploy Gitea via docker-compose

- Deploy HashiCorp Vault via docker-compose

- Deploy JFrog Container Registry OSS via docker-compose

- Deploy SonarQube via docker-compose

- Deploy cAdvisor to monitor host resource usage per container service

- Expose Prometheus metrics from Traefik service

- Create Grafana dashboards for Traefik, and cAdvisor

- Deploy any other service you like!

Task 10

Goal: Implement a backups restore policy.

- Create a backups restore script that runs only when the value of a ‘restore’ variable is equal to ’true’. The script should take in the bucket-name, date for the specific backup to be restored, and a ‘1’ or ‘2’ value indicating the first or second backup of the day should be restored.
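To make the inputs of that restore script concrete, here’s a small Python sketch of the gate and the archive selection. It assumes the twice-daily archives are named ‘volume-YYYYmmddTHHMMSSZ.tar.gz’, so sorting the names of a given day gives the first and second backup in order. All function names here are hypothetical, and the restore command assumes tar runs as root inside the temporary container so that ownership is restored.

```python
def should_restore(value):
    """The script only acts when the 'restore' variable is exactly 'true'."""
    return value.strip().lower() == "true"


def pick_backup(names, volume, day, slot):
    """Pick the first (slot=1) or second (slot=2) archive of `volume`
    taken on `day`, where `day` is written as 'YYYY-MM-DD'."""
    if slot not in (1, 2):
        raise ValueError("slot must be 1 or 2")
    prefix = f"{volume}-{day.replace('-', '')}T"
    same_day = sorted(
        name for name in names
        if name.startswith(prefix) and name.endswith(".tar.gz")
    )
    if len(same_day) < slot:
        raise LookupError(f"no backup #{slot} for {volume} on {day}")
    return same_day[slot - 1]


def restore_command(volume, archive, backup_dir):
    """Command that unpacks the archive into the volume via a throwaway container."""
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/target",
        "-v", f"{backup_dir}:/backups:ro",
        "alpine",
        "tar", "-xzf", f"/backups/{archive}", "-C", "/target",
    ]
```

The bucket-name parameter comes in before any of this: the script would first download the archives for the requested date from that bucket into a local directory, then run the selection and the restore command per volume, with the affected containers stopped.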

Task 11

Goal: Privatize access to Infrastructure via WireGuard VPN.

- Install WireGuard on the Host server via Ansible.

- Through a combination of Ansible and Terraform steps, set up and configure a WireGuard Interface on the server: generate an appropriate config file for this Interface, configure the Interface with the config file, then generate appropriate client-config files for connecting to this Interface.

- Configure Traefik, via an IP-Whitelist, to only allow traffic to all the services on connections initiated via the Wireguard VPN subnet.
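For a feel of what the generated client-config files and the whitelist check boil down to, here’s a hedged Python sketch. The 10.8.0.0/24 subnet, the endpoint, the key placeholders and the function names are all assumptions for illustration. In the real project the keys come from WireGuard’s own tooling and the files are templated by Ansible, and the whitelist check is done by Traefik, not by your own code.

```python
import ipaddress


def peer_addresses(subnet, count):
    """Give the server the first host address in the VPN subnet and the
    next `count` addresses to clients."""
    hosts = iter(ipaddress.ip_network(subnet).hosts())
    server = next(hosts)
    clients = [next(hosts) for _ in range(count)]
    return server, clients


def client_config(private_key, address, server_public_key, endpoint, subnet):
    """Render a wg-quick style client config that routes only the VPN subnet."""
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}/32",
        "",
        "[Peer]",
        f"PublicKey = {server_public_key}",
        f"Endpoint = {endpoint}",
        f"AllowedIPs = {subnet}",
        "PersistentKeepalive = 25",
    ]
    return "\n".join(lines) + "\n"


def allowed_by_whitelist(source_ip, subnet):
    """The whitelist idea in one line: is the caller inside the VPN subnet?"""
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(subnet)
```

With a 10.8.0.0/24 subnet, a request arriving from 10.8.0.7 would pass the whitelist, while one from a public address such as 203.0.113.9 would be rejected before it reaches any service.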

Task 12

Goal: Deploy and Configure a Jenkins Agent VPS via Terraform and Ansible.

- Using Terraform, deploy an additional VPS server, of any size of your choosing, to act as a build agent for your Jenkins instance.

- Configure your agent with Ansible, installing any tool Jenkins would need, such as a JDK package, and Trivy for Container Image Scanning.

- Using Ansible, install WireGuard on your agent and set it up as a Peer in your private VPN.

- (Optional) If you’d like to be able to access your services in Traefik using their FQDNs from the Agent, set up appropriate DNS records on the Agent using Ansible.

Conclusion

Voila! If your skills have carried you this far 😉 then you have yourself a working copy of this Infrastructure by now. If you loved this project, don’t forget to drop a Like. Also let me know in the comments what you think about the project and how you believe I could have made it better.